The U.S. Justice Department is investigating a county's child welfare system to determine whether its use of an artificial intelligence tool that predicts which children could be at risk of harm is discriminating against parents with disabilities or other protected groups.

Lauren Hackney feeds her 1-year-old daughter chicken and macaroni during a supervised visit at their apartment in Oakdale, Pa., on Thursday, Nov. 17, 2022. Lauren and her husband, Andrew, wonder if their daughter’s own disability may have been misunderstood in the child welfare system. The girl was recently diagnosed with a disorder that can make it challenging for her to process her sense of taste, which they now believe likely contributed to her eating issues all along.

"They had custody papers and they took her right there and then," Lauren Hackney recalled. "And we started crying." Lauren Hackney has attention-deficit hyperactivity disorder that affects her memory, and her husband, Andrew, has a comprehension disorder and nerve damage from a stroke suffered in his 20s. Their baby girl was just 7 months old when she began refusing to drink her bottles. Facing a nationwide shortage of formula, they traveled from Pennsylvania to West Virginia looking for some and were forced to change brands. The baby didn't seem to like it.

Over the past six years, Allegheny County has served as a real-world laboratory for testing AI-driven child welfare tools that crunch reams of data about local families to try to predict which children are likely to face danger in their homes. Today, child welfare agencies in at least 26 states and Washington, D.C., have considered using algorithmic tools, and jurisdictions in at least 11 have deployed them, according to the American Civil Liberties Union.

What they measure matters. A recent analysis by ACLU researchers found that when Allegheny's algorithm flagged people who accessed county services for mental health and other behavioral health programs, that could add up to three points to a child's risk score, a significant increase on a scale of 20.

"We're sort of the social work side of the house, not the IT side of the house," Nichols said in an interview. "How the algorithm functions, in some ways is, I don't want to say is magic to us, but it's beyond our expertise and experience." In Pennsylvania, California and Colorado, county officials have opened up their data systems to the two academic developers who select data points to build their algorithms. Rhema Vaithianathan, a professor of health economics at New Zealand's Auckland University of Technology, and Emily Putnam-Hornstein, a professor at the University of North Carolina at Chapel Hill's School of Social Work, said in an email that their work is transparent and that they make their computer models public.

Through tracking their work across the country, however, the AP found their tools can set families up for separation by rating their risk based on personal characteristics they cannot change or control, such as race or disability, rather than just their actions as parents. "This footnote refers to our exploration of more than 800 features from Allegheny's data warehouse more than five years ago," the developers said by email. In the same 2017 report, the developers acknowledged that using race data didn't substantively improve the model's accuracy, but they continued to study it in Douglas County, Colorado, though they ultimately opted against including it in that model.

Justice Department attorneys cited the same AP story last fall when federal civil rights attorneys started inquiring about additional discrimination concerns in Allegheny's tool, three sources told the AP. They spoke on the condition of anonymity, saying the Justice Department asked them not to discuss the confidential conversations. Two said they also feared professional retaliation.

People with disabilities are overrepresented in the child welfare system, yet there's no evidence that they harm their children at higher rates, said Traci LaLiberte, a University of Minnesota expert on child welfare and disabilities. Before algorithms were in use, the child welfare system had long distrusted parents with disabilities. Into the 1970s, they were regularly sterilized and institutionalized, LaLiberte said. A landmark federal report in 2012 noted parents with psychiatric or intellectual disabilities lost custody of their children as much as 80 percent of the time.

The AP found those design choices can stack the deck against people who grew up in poverty, hardening historical inequities that persist in the data, or against people with records in the juvenile or criminal justice systems, long after society has granted redemption. And critics say that algorithms can create a self-fulfilling prophecy by influencing which families are targeted in the first place.

The local algorithm could tag her for her prior experiences in foster care and juvenile probation, as well as the unfounded child abuse allegation, Chandler-Cole says. She wonders if AI could also properly assess that she was quickly cleared of any maltreatment concerns, or that her nonviolent offense as a teen was legally expunged.

"Rhema is one of the world leaders and her research can help to shape the debate in Denmark," a Danish researcher said on LinkedIn last year, regarding Vaithianathan's advisory role related to a local child welfare tool that was being piloted. Meanwhile, foster care and the separation of families can have lifelong developmental consequences for the child.

المملكة العربية السعودية أحدث الأخبار, المملكة العربية السعودية عناوين

Similar News:يمكنك أيضًا قراءة قصص إخبارية مشابهة لهذه التي قمنا بجمعها من مصادر إخبارية أخرى.

Opinion | Accuracy of leaked CSIS documents is not clear, so let’s not over react

Opinion | Accuracy of leaked CSIS documents is not clear, so let’s not over react

اقرأ أكثر »

US Justice Department is reportedly investigating TerraUSD (UST) collapseFBI and SDNY are probing the collapse of Terra’s algo-stablecoin – UST The agencies investigation is on similar lines as that of the SEC, which charged Do Kwon for committing securities fraud The United States Justice Department is reportedly investigating the collapse of the once-leading algo-stablecoin – TerraUSD (UST). According to a report by Wall […]

US Justice Department is reportedly investigating TerraUSD (UST) collapseFBI and SDNY are probing the collapse of Terra’s algo-stablecoin – UST The agencies investigation is on similar lines as that of the SEC, which charged Do Kwon for committing securities fraud The United States Justice Department is reportedly investigating the collapse of the once-leading algo-stablecoin – TerraUSD (UST). According to a report by Wall […]

اقرأ أكثر »

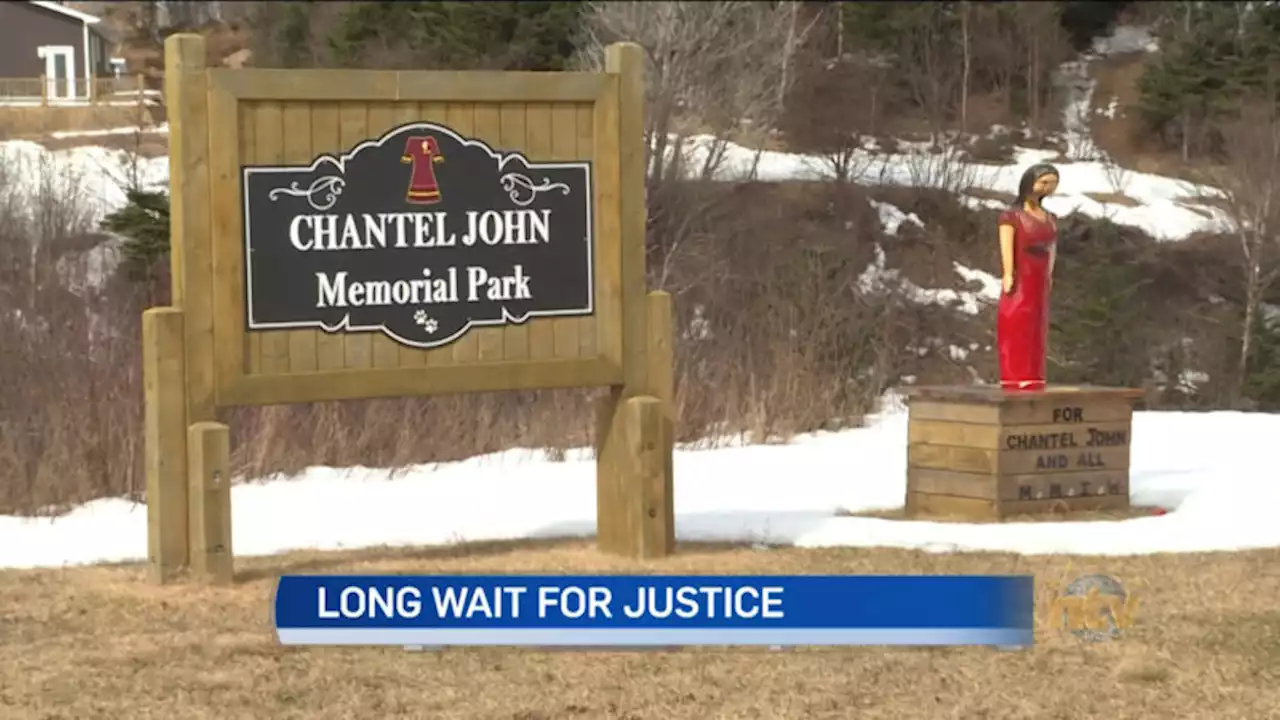

Miawpukek First Nation still waiting for justice four years after Chantel John’s death

Miawpukek First Nation still waiting for justice four years after Chantel John’s death

اقرأ أكثر »

Witness to altercation involving Justice Russell Brown says he followed her to hotel roomBody-cam footage and a written police report show that three witnesses to Justice Brown’s conduct at an Arizona resort told mostly the same story as that reported by a former U.S. Marine

Witness to altercation involving Justice Russell Brown says he followed her to hotel roomBody-cam footage and a written police report show that three witnesses to Justice Brown’s conduct at an Arizona resort told mostly the same story as that reported by a former U.S. Marine

اقرأ أكثر »

My statement stands, Supreme Court justice says of alleged 'unwanted touching'Russell Brown says he did nothing wrong prior to an alleged altercation that triggered a complaint to the Canadian Judicial Council.

My statement stands, Supreme Court justice says of alleged 'unwanted touching'Russell Brown says he did nothing wrong prior to an alleged altercation that triggered a complaint to the Canadian Judicial Council.

اقرأ أكثر »

My statement stands, Supreme Court justice says of alleged 'unwanted touching'Supreme Court Justice Russell Brown continues to insist he did nothing wrong prior to an alleged altercation in Arizona that triggered a complaint to the Canadian Judicial Council.

My statement stands, Supreme Court justice says of alleged 'unwanted touching'Supreme Court Justice Russell Brown continues to insist he did nothing wrong prior to an alleged altercation in Arizona that triggered a complaint to the Canadian Judicial Council.

اقرأ أكثر »